Data science and machine learning approaches to catalysis

Lauren Smith

Jan 8, 2024

Source: Maya Bhat

"If you're not yet learning about the ways data science and machine learning are impacting catalysis today, it's time to find where it is going to have an impact on your work," says John Kitchin.

Kitchin, a professor of chemical engineering at Carnegie Mellon University, is a leader in the field and recognizes that keeping up with the pace of new work can be challenging. In November, he gave a keynote address for the Catalysis and Reaction Engineering division at the American Institute of Chemical Engineers annual meeting.

In his talk, titled "Data science and machine learning approaches to catalysis," Kitchin offered three perspectives on how his group has incorporated data science (DS) and machine learning (ML) into their catalysis research over the past five years. "I wanted to illustrate what kinds of things are possible when you think broadly about data science and machine learning," he says.

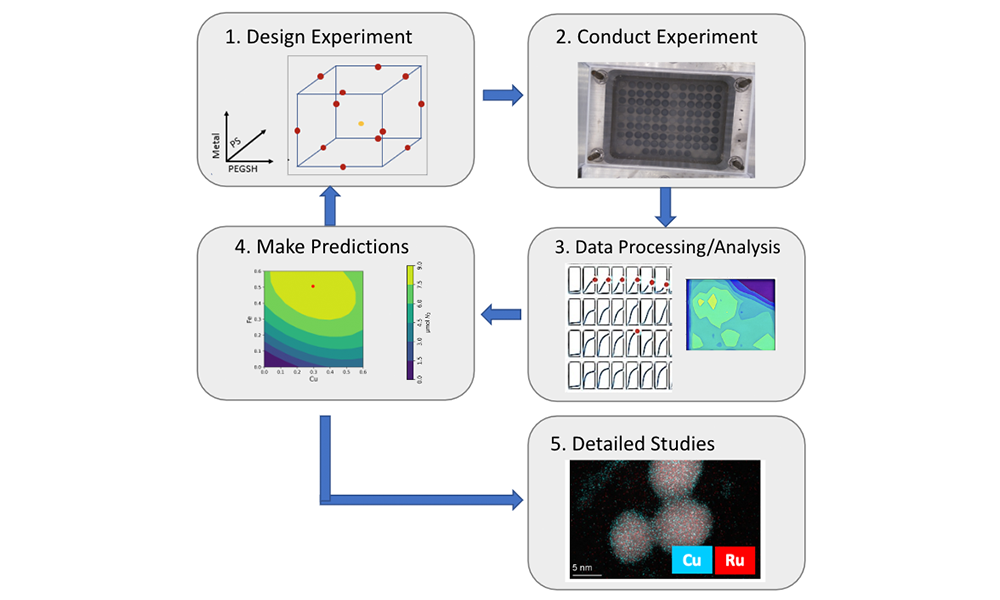

Kitchin first presented an example of combining DS/ML with high-throughput experimentation. Discovering new catalysts through data-directed, machine-learning-augmented or -accelerated materials synthesis is a common dream in the field. Kitchin and his collaborators focused on the data-directed synthesis of multicomponent materials for light-driven hydrogen production from oxygenates. They found that no single model could be made predictive across the full composition space of metals and their variations. "We did tens of thousands of experiments. We learned lots of interesting things, but that didn't happen because of the ML closed-loop cycle we designed," says Kitchin.

The researchers did find that traditional design of experiments was very effective in finding the optimal conditions for stabilizing particles. "The results are a reminder that complex systems are complex," says Kitchin. "You cannot always use density-functional theory or ML to force a way through."
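
The article does not say which experimental design Kitchin's team used. As a minimal sketch of what "traditional design of experiments" can look like, the Python below builds a two-level full-factorial design and estimates each factor's main effect. The factor names and the toy linear response are hypothetical stand-ins for measured outcomes such as particle stability; they are not data from this work.

```python
from itertools import product


def full_factorial(factors):
    """Return all 2**k runs of a two-level design in coded units (-1 = low, +1 = high)."""
    return [dict(zip(factors, levels))
            for levels in product((-1, 1), repeat=len(factors))]


def main_effects(design, responses):
    """Main effect of each factor: mean response at +1 minus mean response at -1."""
    effects = {}
    for name in design[0]:
        high = [y for run, y in zip(design, responses) if run[name] == 1]
        low = [y for run, y in zip(design, responses) if run[name] == -1]
        effects[name] = sum(high) / len(high) - sum(low) / len(low)
    return effects


# Hypothetical factors for a particle-stabilization study (illustrative only).
design = full_factorial(["temperature", "stabilizer", "ph"])

# Toy stand-in for measured responses; real values would come from the lab.
responses = [10 + 3 * run["temperature"] - 2 * run["stabilizer"] for run in design]

print(main_effects(design, responses))  # {'temperature': 6.0, 'stabilizer': -4.0, 'ph': 0.0}
```

Because every factor appears equally often at each level, the main effects are estimated independently of one another; with many factors, fractional-factorial designs reduce the number of runs.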

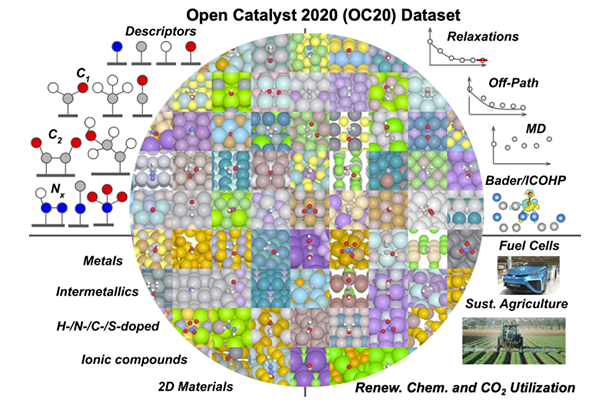

Kitchin also presented his work with machine-learned potentials built on large graph neural networks. These potentials occupy a space between density-functional theory (DFT) and classical force fields: they are faster and less expensive than DFT while offering more room for development than force fields. Machine-learned potentials are enabling many simulation methods that weren't possible before.

Source: Open Catalyst Project tutorial notebook, https://github.com/Open-Catalyst-Project/ocp/blob/main/tutorials/OCP_Tutorial.ipynb

Kitchin's current work in this area is a collaboration with Meta through the Open Catalyst Project. "We use graph neural networks to take an atomic system, represent it as a graph, build a set of features, and then train those features to predict properties like energy, forces, or electron densities," explains Kitchin. The project's state-of-the-art models have been trained on millions of DFT calculations.
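
The pipeline Kitchin describes (atoms to graph to features to property) can be sketched in plain Python. This is a toy under stated assumptions, not Open Catalyst Project code: the distance cutoff, the Gaussian radial-basis features, the single message-passing round, and the linear per-atom energy readout are all illustrative choices, there are no periodic boundaries, and the readout weights are placeholders where a real model would train them against DFT energies and forces.

```python
import math


def build_graph(positions, cutoff):
    """Edges (i, j, distance) between atoms closer than `cutoff`; no periodic boundaries."""
    edges = []
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            d = math.dist(positions[i], positions[j])
            if d < cutoff:
                edges.append((i, j, d))
    return edges


def node_features(n_atoms, edges, centers, width):
    """Per-atom features: Gaussian radial basis functions of neighbor distances, summed."""
    feats = [[0.0] * len(centers) for _ in range(n_atoms)]
    for i, j, d in edges:
        for k, c in enumerate(centers):
            g = math.exp(-((d - c) / width) ** 2)
            feats[i][k] += g
            feats[j][k] += g
    return feats


def message_pass(feats, edges):
    """One message-passing round: each atom adds its neighbors' current features."""
    updated = [row[:] for row in feats]
    for i, j, _ in edges:
        for k in range(len(feats[i])):
            updated[i][k] += feats[j][k]
            updated[j][k] += feats[i][k]
    return updated


def predict_energy(feats, weights):
    """Total energy as a sum of per-atom linear readouts (weights would be trained)."""
    return sum(sum(w * f for w, f in zip(weights, atom)) for atom in feats)


# Hypothetical three-atom system; the third atom lies outside the cutoff.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
edges = build_graph(positions, cutoff=2.0)
feats = message_pass(node_features(len(positions), edges, centers=[1.0, 2.0], width=0.5), edges)
weights = [0.5, 0.0]  # placeholder values, not trained parameters
print(edges, predict_energy(feats, weights))
```

Production models learn richer features and interaction functions and handle periodic catalyst surfaces; the sketch only shows how a structure becomes a graph whose node features feed an energy prediction.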

Lastly, Kitchin presented an application that grew out of the frameworks underlying his first two perspectives. "The biggest change that I've seen in the last five years is the development of automatic differentiation as a commodity programming tool," he says. Automatic differentiation (AD) is a technique for computing the derivatives of functions implemented as computer programs, accurate to floating-point precision and without the truncation error of finite-difference approximations.
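
As a minimal sketch of the idea, the plain Python below implements forward-mode AD with dual numbers. It is a teaching toy, not how production tools work internally; libraries such as JAX and PyTorch also provide reverse-mode AD, which is more efficient when one output (an energy) depends on many inputs (atomic coordinates). The Lennard-Jones pair potential is an illustrative choice, not a model from Kitchin's work. It shows why AD matters for atomistic simulation: code that computes an energy also yields its exact derivative, and the force is the negative of that derivative.

```python
class Dual:
    """A number a + b*eps with eps**2 == 0; the b part carries the derivative."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    @staticmethod
    def _lift(other):
        """Treat plain numbers as constants (derivative zero)."""
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        other = self._lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __sub__(self, other):
        other = self._lift(other)
        return Dual(self.value - other.value, self.deriv - other.deriv)

    def __rsub__(self, other):
        return self._lift(other) - self

    def __mul__(self, other):
        # Product rule.
        other = self._lift(other)
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__

    def __truediv__(self, other):
        # Quotient rule.
        other = self._lift(other)
        return Dual(self.value / other.value,
                    (self.deriv * other.value - self.value * other.deriv) / other.value ** 2)

    def __rtruediv__(self, other):
        return self._lift(other) / self

    def __pow__(self, n):
        # Power rule; supports a constant (non-Dual) exponent only.
        return Dual(self.value ** n, n * self.value ** (n - 1) * self.deriv)


def derivative(f, x):
    """Return f(x) and df/dx in one pass by seeding the derivative part with 1."""
    out = f(Dual(x, 1.0))
    return out.value, out.deriv


def lennard_jones(r, epsilon=1.0, sigma=1.0):
    """Illustrative pair energy 4*eps*((sigma/r)**12 - (sigma/r)**6), reduced units."""
    sr6 = (sigma / r) ** 6
    return 4 * epsilon * (sr6 ** 2 - sr6)


energy, dE_dr = derivative(lennard_jones, 2 ** (1 / 6))
print(energy, -dE_dr)  # the force along r is the negative derivative of the energy
```

At r = 2^(1/6), the minimum of this potential, the printed energy is about -1 and the force is about 0, as expected.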

Source: John Kitchin

Once hidden deep in compiled libraries that required a skill level beyond most researchers, AD is now widely available in languages like Python and Julia through commonly used libraries. This new paradigm of scientific programming is changing what researchers can do with DS/ML.

"My point of view is that ML is just a new way for model building. A few decades of research converged with a few decades of software and hardware development," says Kitchin. Today, models can be built and in many cases trained on a laptop or desktop. Less specialized knowledge is required, and Kitchin encourages researchers to learn about DS/ML. He also cautions that it is not a panacea. Educational, technical, and communication challenges remain, and Kitchin is continuing his work to address them.

For media inquiries, please contact Lauren Smith at lsmith2@andrew.cmu.edu.